Aerospace & Defence

Project: Day, Night, All Weather Synthetic Vision Systems

Summary

Helicopters are routinely used by the Emergency Services, the Military and other operators to tackle extremely difficult challenges where human life is at stake. For example, the Emergency Services use helicopters to gain access to difficult-to-reach and frequently hazardous areas that cannot be reached by any other means. Time is also often critical when extracting an injured person and transporting them quickly to hospital for life-saving treatment. Operating scenarios for these helicopters range from very good visibility to extremely hazardous conditions, often down to near-zero visibility and at very low level (below treetop height). Unfortunately, operating in these extreme conditions places the lives of the helicopter crew at risk, and sometimes a mission has to be aborted because of poor visibility.

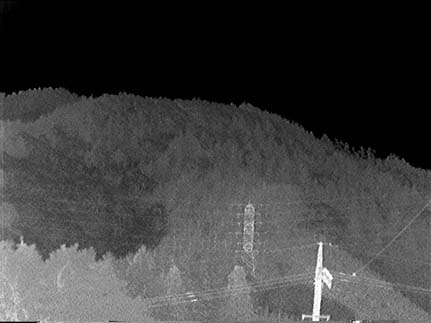

The environment in which the helicopter is required to operate is full of hazards that the aircrew need to be aware of, including telegraph wires, aerial masts, terrain and building structures. Not all of these hazards are known beforehand (i.e. stored in a database), so a sophisticated means of detecting them needs to be carried aboard the helicopter. The big challenge is how to present these features to the aircrew whilst they are flying at speed, and with sufficient warning for them to take evasive action. The different sensor technologies therefore need to be optimally integrated so that the pilot has sufficient reaction time to fly the aircraft safely and avoid the obstacles.

Aims & Objectives

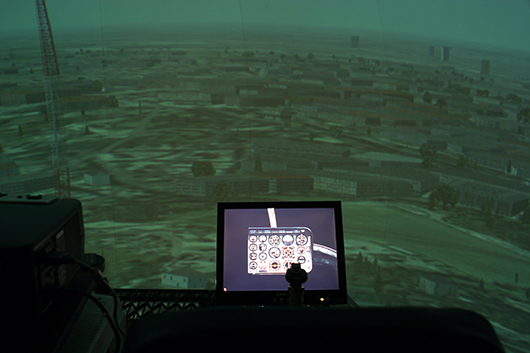

- Creation of a comprehensive coupled simulator integrating real-time models of the atmosphere, sensor performance, system performance, helicopter flight dynamics, synthetic vision display presentation and human reaction time/behaviour.

- Integrity assessment to address the issue of spatial awareness and range to interference in obscured visibility conditions

- Estimation of the integrity of the synthetic information provided to the pilot in normal and failure conditions

- Undertake a study of the implications and rationale of the 2000 m visibility requirement dictated by air regulations

- Create a mathematical model of spatial error in relation to the terrain profile

- Determine range to interference in obscured visibility conditions, without the use of night vision goggles (NVG).

- To create a computational model of spatial error in relation to the terrain profile.

- To validate the complex coupled simulation model

- Sensor modelling & simulation (including atmospheric condition modelling)

- Development of range to interference model

- Modelling of crew interaction and response

- Analysis of multi-sensor integrated cues

- Analysis of whole-system performance (architecture, timing, information presentation, crew reaction time, etc.)

- Support to safety case studies

- Underpinning simulator studies
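Several of these aims depend on one timing relationship. An obstacle must be detected far enough ahead that system latency, crew reaction time and the avoidance manoeuvre all fit into the time left before the aircraft reaches it. The project's own range and reaction-time models are not described here, so what follows is only a minimal sketch under heavily simplified assumptions. It assumes constant ground speed, a straight-line approach, and a timing budget split into three hypothetical terms (`system_latency_s`, `pilot_reaction_s`, `manoeuvre_s`). All names and numbers are illustrative and are not project data.

```python
from dataclasses import dataclass

# Conversion factor: 1 knot = 1852 m per hour.
KNOTS_TO_MPS = 1852.0 / 3600.0


@dataclass
class WarningBudget:
    """Hypothetical timing budget for an obstacle-warning chain (values are assumptions)."""
    system_latency_s: float  # assumed sensing, processing and display update delay
    pilot_reaction_s: float  # assumed time for the crew to notice the cue and respond
    manoeuvre_s: float       # assumed time for the aircraft to complete avoidance

    def total_s(self) -> float:
        """Total time from first detection to completing the avoidance manoeuvre."""
        return self.system_latency_s + self.pilot_reaction_s + self.manoeuvre_s


def required_detection_range_m(ground_speed_kt: float, budget: WarningBudget) -> float:
    """Distance flown at constant ground speed while the whole warning chain runs."""
    if ground_speed_kt < 0:
        raise ValueError("ground speed must be non-negative")
    return ground_speed_kt * KNOTS_TO_MPS * budget.total_s()


def time_to_obstacle_s(range_m: float, ground_speed_kt: float) -> float:
    """Time until reaching an obstacle first seen at range_m, at constant speed."""
    if ground_speed_kt <= 0:
        raise ValueError("ground speed must be positive")
    return range_m / (ground_speed_kt * KNOTS_TO_MPS)


if __name__ == "__main__":
    # Illustrative budget only (hypothetical numbers, not project results).
    budget = WarningBudget(system_latency_s=0.5, pilot_reaction_s=2.5, manoeuvre_s=5.0)
    for speed_kt in (60, 90, 120):
        need_m = required_detection_range_m(speed_kt, budget)
        avail_s = time_to_obstacle_s(2000.0, speed_kt)
        print(f"{speed_kt:>3} kt: need >= {need_m:.0f} m; 2000 m gives {avail_s:.1f} s")
```

With the assumed 8 s budget, the required detection range grows linearly with speed, from about 247 m at 60 kt to about 494 m at 120 kt. At those speeds, 2000 m of visibility leaves roughly 65 s and 32 s respectively. A representative model would also have to include the factors listed above, such as sensor performance in obscurants, display timing and crew behaviour.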

System Overview

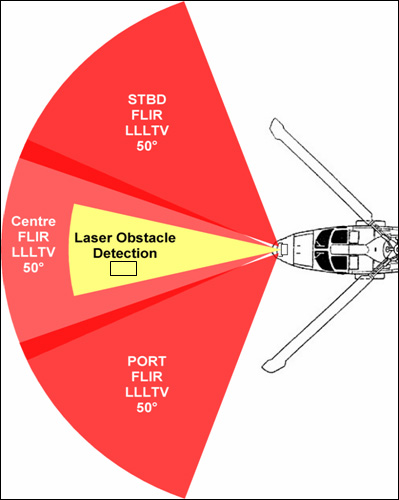

For commercial reasons it is not possible to detail the architecture of the synthetic vision system, other than to say that it comprised three forward-looking infrared (FLIR) sensors, three low-light TV sensors, a scanning laser obstacle warning system, GPS and other navigation sensors.

Results

Sensor information was processed and integrated with other sensor data and on-board database information to create hazard/obstacle warning cues for the pilot. Coupling the simulator with models of the atmosphere allowed the symbology design to be optimised for different environmental conditions before candidate symbology suites were proposed for airborne trials. Unfortunately, we are not permitted to provide examples of the synthetic vision display symbology we developed for the project, since it is commercially protected.

Conclusions

Model-based systems engineering techniques were adopted both to express the simulator architecture and to model the constituent systems. Our work in the e-Science RealityGrid project was adapted to help define the computational architecture for the synthetic vision simulator. In particular, the human factors aspects of our RealityGrid research were directly applicable to creating a representative visually coupled system for this project. Regular meetings between the research team and the flight trials team ensured that the AVRRC simulator tracked the trials programme very closely, so that risks could be identified before trials took place.

Impact

BAE Systems placed an experienced engineer in our research team; this proved to be a very effective approach to knowledge transfer and ensured our research was more easily integrated into the extensive flight trials programme. It also ensured our system models were as representative as possible of the airborne test environment.

The project was extremely successful, and the AVRRC-based laboratory risk-reduction research saved millions of pounds in flight trials programme costs. The simulation environment also proved to be an excellent platform for prototyping and testing novel synthetic vision system concepts prior to flight trials.
