As part of the IAS Annual Theme 'AI: Facts, Fictions, Futures', this virtual event will bring together a range of academics to discuss the impact and future of AI.

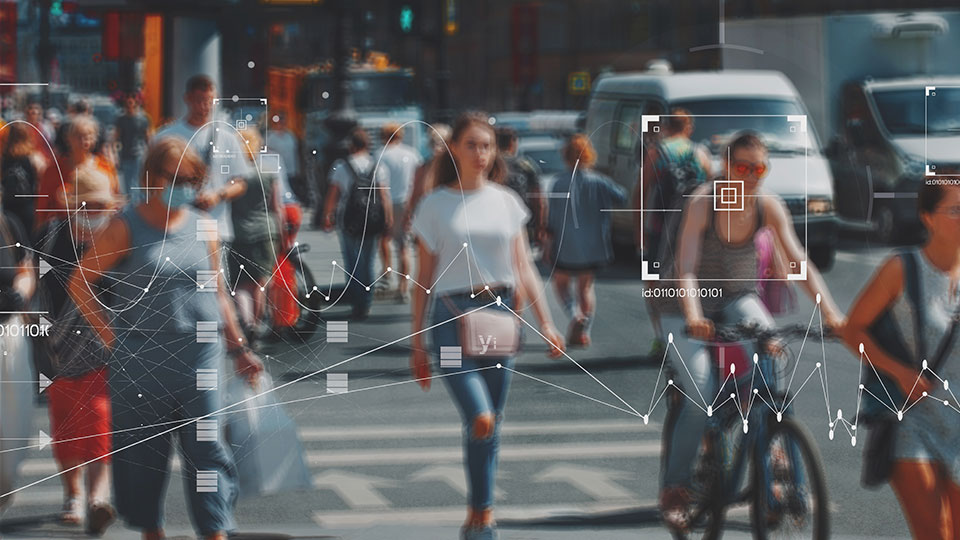

Voices in academia, the technology industry and civil society are increasingly critical of the implications of AI's growing pervasiveness in all domains of social, economic and political life. From racial bias and discrimination to politically motivated decision-making algorithms, a plethora of high-profile cases appear to justify the critique that the design and deployment of AI is, after all, all too human, and hence subject to human bias and fallacy. As we approach the close of the IAS Annual Theme series on AI: Facts, Fictions, Futures, this event will bring together academics in the social sciences, humanities and technology studies to debate the current impacts of AI and suggest principles for alternative futures.

Convened by: Dr Amalia Sabiescu, Dr Lise Jaillant & Dr Adrian Leguina

IAS Visiting Fellows in residence:

Prof Veronica Barassi, University of St Gallen, Switzerland

Dr Stephen Cave, Leverhulme Centre for the Future of Intelligence, Cambridge University

Prof Virginia Dignum, Umeå University, Delft University of Technology, The Netherlands

Prof Lauren Klein, Emory University, USA

Prof Jonathan Roberge, National Institute of Scientific Research, Canada

For more details on the IAS Annual Theme of AI: Facts, Fictions, Futures, please click here.

Programme:

| Time | Session |
|---|---|
| 10:30 | Refreshments and welcome. Introductory remarks: Prof Marsha Meskimmon, Director of the Institute of Advanced Studies (IAS); Dr Amalia Sabiescu, Dr Lise Jaillant & Dr Adrian Leguina |
| 10:45 | Parrots All the Way Down: Controversies within AI's Conquest of Language. Jonathan Roberge. In-person presentation and Q&A |
| 11:30 | The Political Life of AI Errors? Mapping Debates, Beliefs and Alternative Imaginations. Veronica Barassi. In-person presentation and Q&A |
| 12:15 | Lunch and informal discussions |
| 13:10 | Reconvene |
| 13:15 | The Problem with Intelligence: Its Value-Laden History and the Future of AI. Stephen Cave, University of Cambridge. Virtual presentation and Q&A |
| 14:00 | Why AI Needs the Humanities. Lauren Klein, Emory University (USA). Pre-recorded virtual presentation (20 min) |
| 14:30 | Responsible AI: From Principles to Action. Virginia Dignum. In-person presentation and Q&A |
| 15:15 | Concluding remarks |
| 15:30 | Refreshments and informal discussions |

Professor Jonathan Roberge, National Institute of Scientific Research, Canada

Parrots All the Way Down: Controversies within AI's Conquest of Language

The departure/firing of Google researcher Timnit Gebru right after she submitted “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” (2020) sent a shock through the AI community, raising several issues of ethics and working relationships. Yet few critiques have taken up Gebru’s own question: have AI and the latest developments in deep learning created models that are too big, too powerful, and too deep? And what would it mean for models to be so? Our proposed analysis will be threefold.

(a) First, we will examine these recent models as social constructions and socio-technical objects. BERT (Bidirectional Encoder Representations from Transformers) was first introduced by Google in 2018 and then integrated into its search engine. It collects information across the web (e.g., Wikipedia) and parses it both from right to left and from left to right to identify parallel connections and predict missing terms. OpenAI’s GPT-3 (Generative Pre-trained Transformer) is even more recent; with its 175 billion parameters, the deep learning calculator is said to be 400 times more powerful than Google’s BERT. Not only does it “decode” input, it also encodes textuality, thereby opening possibilities for automated writing, such as writing news reports.

(b) Second, it is precisely the meaning invested by automation that could be interrogated. What is the epistemological conception that is promoted throughout these data architectures and statistical regressions? How is language mediated and understood? In a nutshell, how do meanings circulate in these types of connectionist and cybernetic visions? For authors such as Bloomfield, Ihde, Introna, Mallery, or Woolgar, and those who coalesce today around Critical AI Studies (CAIS) (i.e., Castelle, Pasquinelli, and Roberge, among others), the fundamentally ambiguous, if not misleading, nature of AI language models makes them the equivalent of Clever Hans, the so-called “smart” horse that was thought to be capable of answering arithmetic questions at the beginning of the 20th century, but was in reality only responding to its owner’s stimuli.

(c) Third, framed as interpretative devices that are problematic, yet perfectly useful, BERT and GPT-3 must be questioned as political objects. These models have become an integral part of everyday life; they are purposefully deployed as part of a digital political economy, in which platforms are key players, that ought to be contextualized. This is precisely why Gebru’s case is relevant, as she concisely put it on Twitter when she challenged her former boss at Google, Jeff Dean: “@jeffdean I realize how much large language models are worth to you now.”

Professor Veronica Barassi, University of St Gallen, Switzerland

The Political Life of AI Errors? Mapping Debates, Beliefs and Alternative Imaginations

Over the last few years, we have seen the emergence of academic researchers, civil society organizations, policy makers, and critical tech entrepreneurs who argue that AI systems are often shaped by systemic inequalities (Virginia Eubanks, Catherine D’Ignazio, Lauren Klein and Kate Crawford, to mention just a few), by racial biases and discrimination (Simone Browne, Safiya Noble and Ruha Benjamin) and by an inaccurate analysis of human practices and intentions (Veronica Barassi). The combination of bias, inaccuracy and lack of transparency implies that AI systems will always be somehow fallacious in reading humans. This ‘human error of AI’ can have a profound impact on individual lives and human rights. As scholars Claudia Aradau and Tobias Blanke have rightly highlighted, concerns with errors, mistakes, and inaccuracies have shaped political debates about what technologies do, where and how certain technologies can be used, and for which purposes. Yet little attention has been paid to the study of error and its political life, especially in relation to AI. According to Aradau and Blanke, when it comes to AI error, we need a “history of the present” that helps us understand how error becomes a problem, raises discussion and debate, incites new reactions, and induces a crisis in previously silent behaviour, habits, practices, and institutions.

In this talk, Professor Barassi will speak about 'the political life of AI errors' by relating the findings of a project that she launched in 2020, titled The Human Error Project: AI, Human Rights and the Conflict over Algorithmic Profiling. The project investigates the struggles, practices and discourses emerging around the issue of AI error and highlights how different actors are negotiating these problems and looking for solutions. The talk will present initial findings and reflect on how some case studies of AI error have become a contested terrain for the construction of new meanings about systemic inequality in society and the importance of imagining alternatives.

Associate Professor Lauren Klein, Emory University, USA

Why AI Needs the Humanities

What is the current state of the field at the intersection of AI and the humanities, and why are humanities scholars essential for AI research? This talk will draw from Klein's recent book, Data Feminism (MIT Press), coauthored with Catherine D’Ignazio, as well as her current research at the intersection of the humanities and AI. She will discuss a range of recent research projects including several of her own: 1) a thematic analysis of a large corpus of nineteenth-century newspapers that reveals the invisible labor of women newspaper editors; 2) the development of a model of lexical semantic change that, when combined with network analysis, tells a new story about Black activism in the nineteenth-century United States; and 3) an interactive book on the history of data visualization that shows how questions of politics have been present in the field since its start. Taken together, these examples demonstrate how the attention to history, culture, and context that characterizes humanities scholarship can not only be translated into the AI research space, but is in fact necessary if AI is to address today’s most urgent research questions—those having to do with the ethical design and deployment of AI systems, their potential for bias, and their transformative possibilities.

Dr Stephen Cave, Leverhulme Centre for the Future of Intelligence, Cambridge University

The Problem with Intelligence: Its Value-Laden History and the Future of AI

This talk argues that the concept of intelligence is highly value-laden in ways that impact debates about AI and its risks and opportunities. This value-ladenness stems from the historical use of the concept of intelligence in the legitimation of dominance hierarchies. I highlight five ways in which this ideological legacy might be having impact: 1) how some aspects of the AI debate perpetuate the fetishization of intelligence; 2) how the fetishization of intelligence impacts on diversity in the technology industry; 3) how certain hopes for AI perpetuate notions of technology and the mastery of nature; 4) how the association of intelligence with the professional class misdirects concerns about AI; and 5) how the equation of intelligence and dominance fosters fears of superintelligence.

Professor Virginia Dignum, Umeå University, Delft University of Technology

Responsible AI: From Principles to Action

Every day we see news about advances in AI and its societal impact. AI is changing the way we work, live and solve challenges, but concerns about fairness, transparency and privacy are also growing. Ensuring AI ethics is more than designing systems whose results can be trusted. It is about the way we design them, why we design them, and who is involved in designing them. In order to develop and use AI responsibly, we need to work towards technical, societal, institutional and legal methods and tools that provide concrete support to AI practitioners, as well as awareness and training to enable participation of all, to ensure the alignment of AI systems with our societies' principles and values.

Contact and booking details

- Name: Kieran Teasdale

- Email address: ias@lboro.ac.uk

- Cost: Free

- Booking information: Please register via Zoom to join this webinar using the 'Book Now' link above